Though robots may not be arguing cases in court any time soon, AI is already having a strong influence on the judicial system in the United States and further afield, according to a recent panel discussion hosted by the AI Alliance of Silicon Valley.

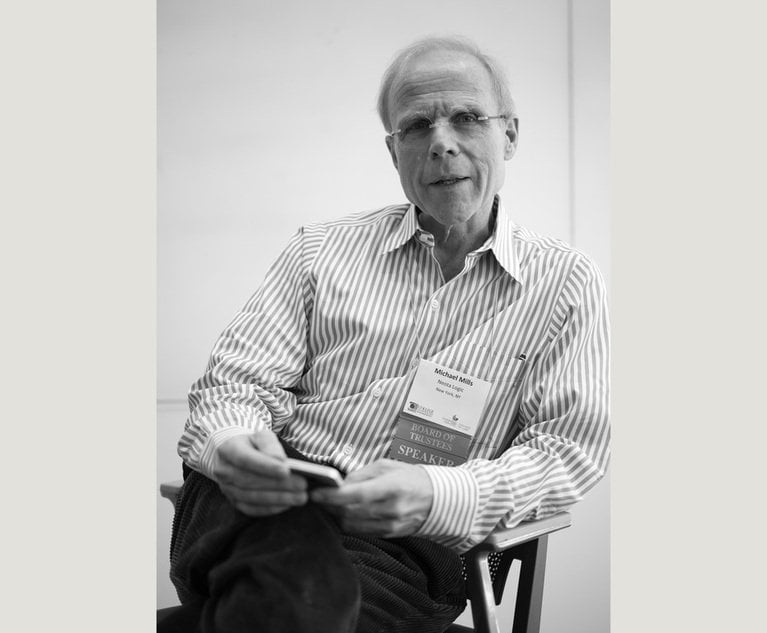

Legal leaders from both sides of the Pacific came together on June 29 in Half Moon Bay to discuss artificial intelligence in law at the US-China AI Tech Summit.

Credit: Zapp2Photo/Shutterstock.com
