As the debate continues over the bias of algorithms and artificial intelligence tools used in important matters ranging from loan approvals to prison sentences, the San Francisco District Attorney’s Office is taking a different approach. Last week, the office announced it is turning to an artificial intelligence-powered bias mitigation tool to redact any race-specific language before a police officer’s incident report hits a prosecutor’s desk.

The software is an effort to remove implicit bias from prosecutors' charging decisions. But observers say the program will need to be monitored, because algorithms are only as objective as the information they are programmed with.

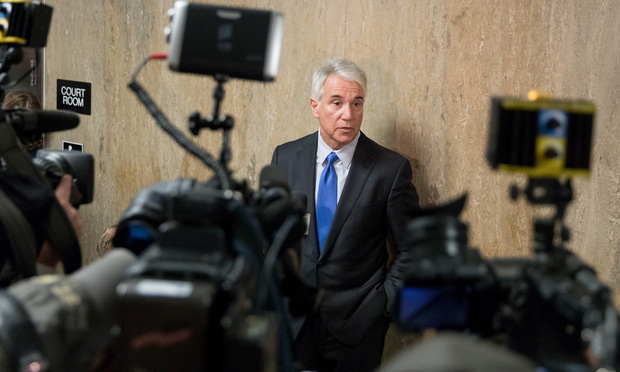

San Francisco District Attorney George Gascon at the sentencing of Jose Zarate.