Featured Firms

Presented by BigVoodoo

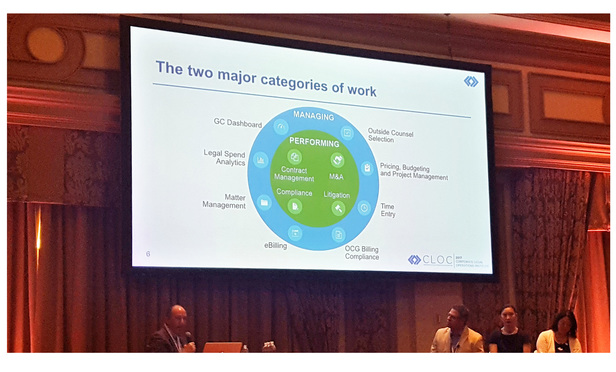

CLOC panelists assess the challenges their organizations have faced in adopting artificial intelligence and how they have overcome them.

May 09, 2017 at 03:17 PM

The original version of this story was published on Law.com.